Advantages

All-Weather, Round-the-Clock Performance

High Cost-Effectiveness

Unified Software-Hardware Architecture

High Safety & Reliability

Scalable ODD (Operational Design Domain)

Modular Delivery

Application Scenarios

G-PAL Integrated Driving and Parking Assistance System

High Performance & HD-Map-Free

11V5R Mid-to-High-Compute Domain Controller

(J6M/2*J6M/MDC 510Pro/MDC 610/Orin X)

Delivers higher computing performance, enough to support advanced ADAS applications such as Urban NOA, strengthening OEMs' market competitiveness.

4D Imaging Front Radar

4D Imaging Corner Radar

Front View Long-Focal Camera

Front View Wide-Angle Camera

Rear View Camera

Surround View Camera

Side View Camera

Domain Controller

D2D

Urban NOA

Highway NOA

Roundabout Navigation

General Obstacle Avoidance

Unprotected Left Turn

11V (11 cameras)

Front view: 8MP *2

Rear view: 3MP *1

Surround view: 3MP *4

Side view: 3MP *4

5R (5 radars)

4D imaging front radar (FR6C) *1

4D imaging corner radar (CR6C) *4
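The "11V5R" name can be checked against the sensor list above: the camera counts add up to 11 and the radar counts to 5. The sketch below encodes that list as a small configuration and derives the name from it. The `Sensor` class, the `SUITE` list, the `config_name` helper and the reading of "V" as camera (vision) and "R" as radar are illustrative assumptions, not part of any G-PAL software interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    """One sensor type in the suite (hypothetical structure for illustration)."""
    name: str
    kind: str   # assumed tag: "V" = camera (vision), "R" = radar
    count: int


# Sensor suite transcribed from the 11V / 5R lists above.
SUITE = [
    Sensor("Front view camera (8MP)", "V", 2),
    Sensor("Rear view camera (3MP)", "V", 1),
    Sensor("Surround view camera (3MP)", "V", 4),
    Sensor("Side view camera (3MP)", "V", 4),
    Sensor("4D imaging front radar (FR6C)", "R", 1),
    Sensor("4D imaging corner radar (CR6C)", "R", 4),
]


def config_name(suite):
    """Derive an '<cameras>V<radars>R' label by summing the counts per kind."""
    cameras = sum(s.count for s in suite if s.kind == "V")
    radars = sum(s.count for s in suite if s.kind == "R")
    return f"{cameras}V{radars}R"


if __name__ == "__main__":
    print(config_name(SUITE))  # 11V5R
```

Keeping the suite as data rather than hard-coding the label means any variant configuration yields its own name from the same counts.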

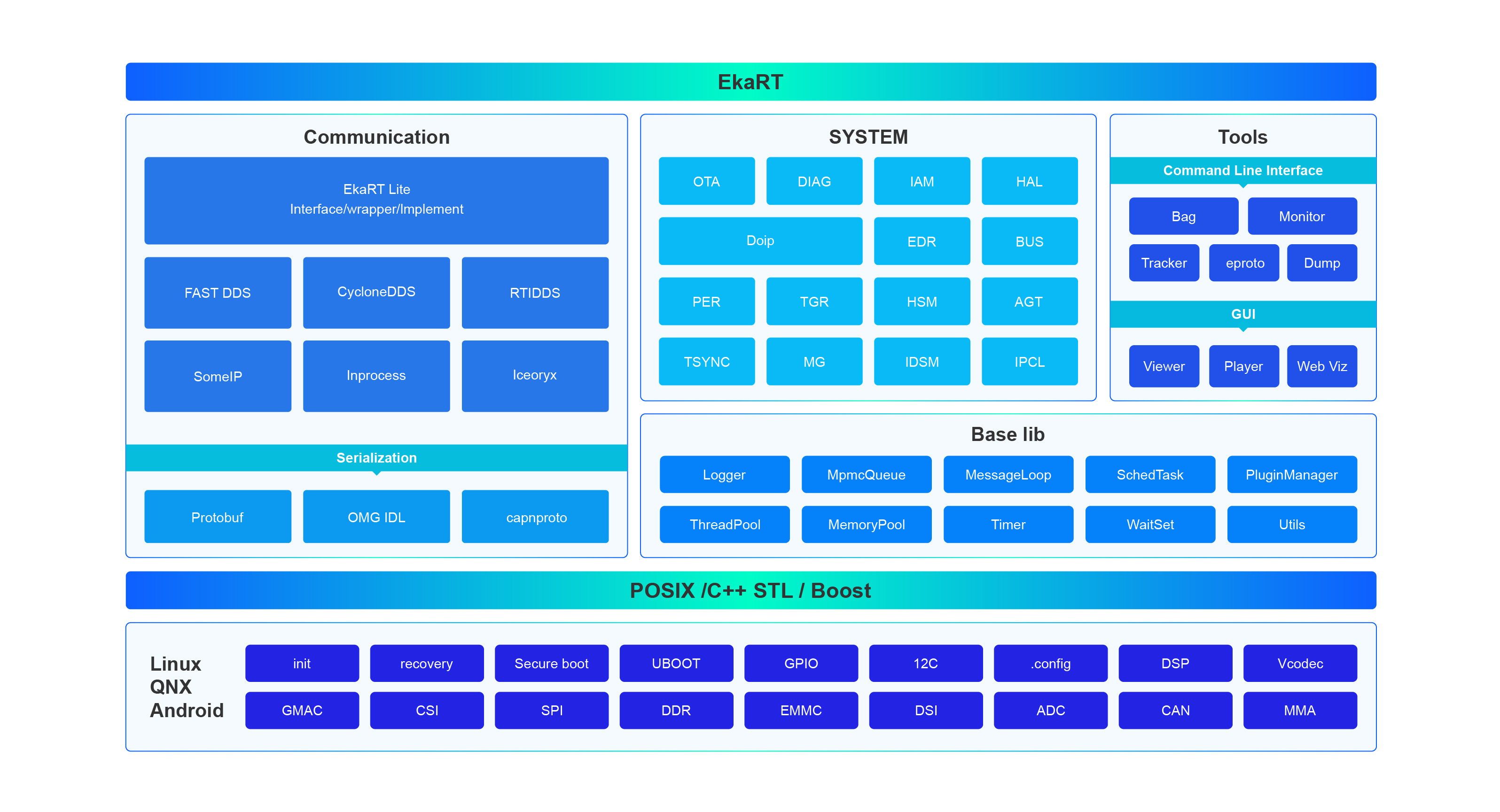

Software Architecture

Functions

Innovative Technologies

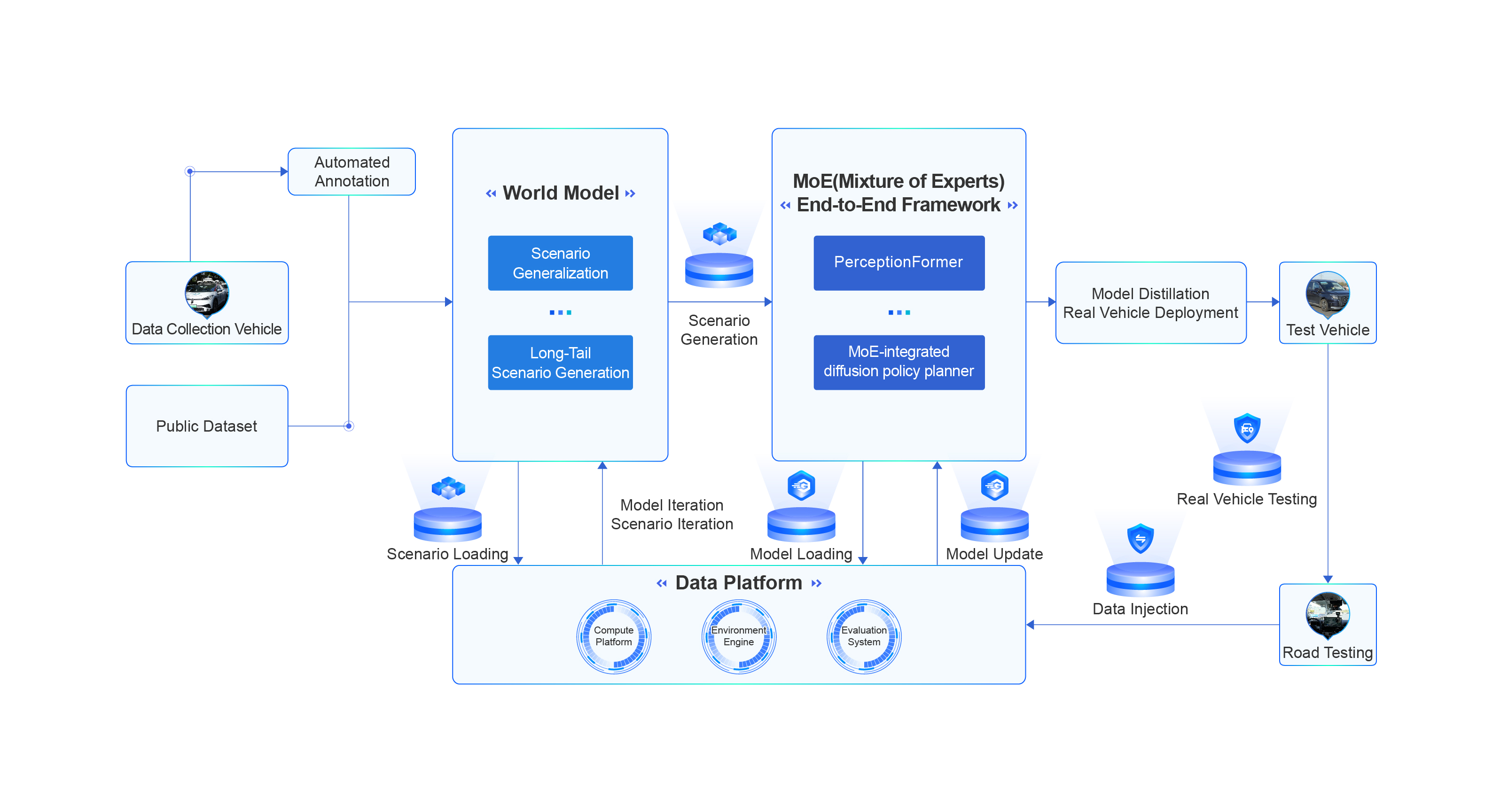

End-to-End Technology Driven by Coupled World Models and Large Models

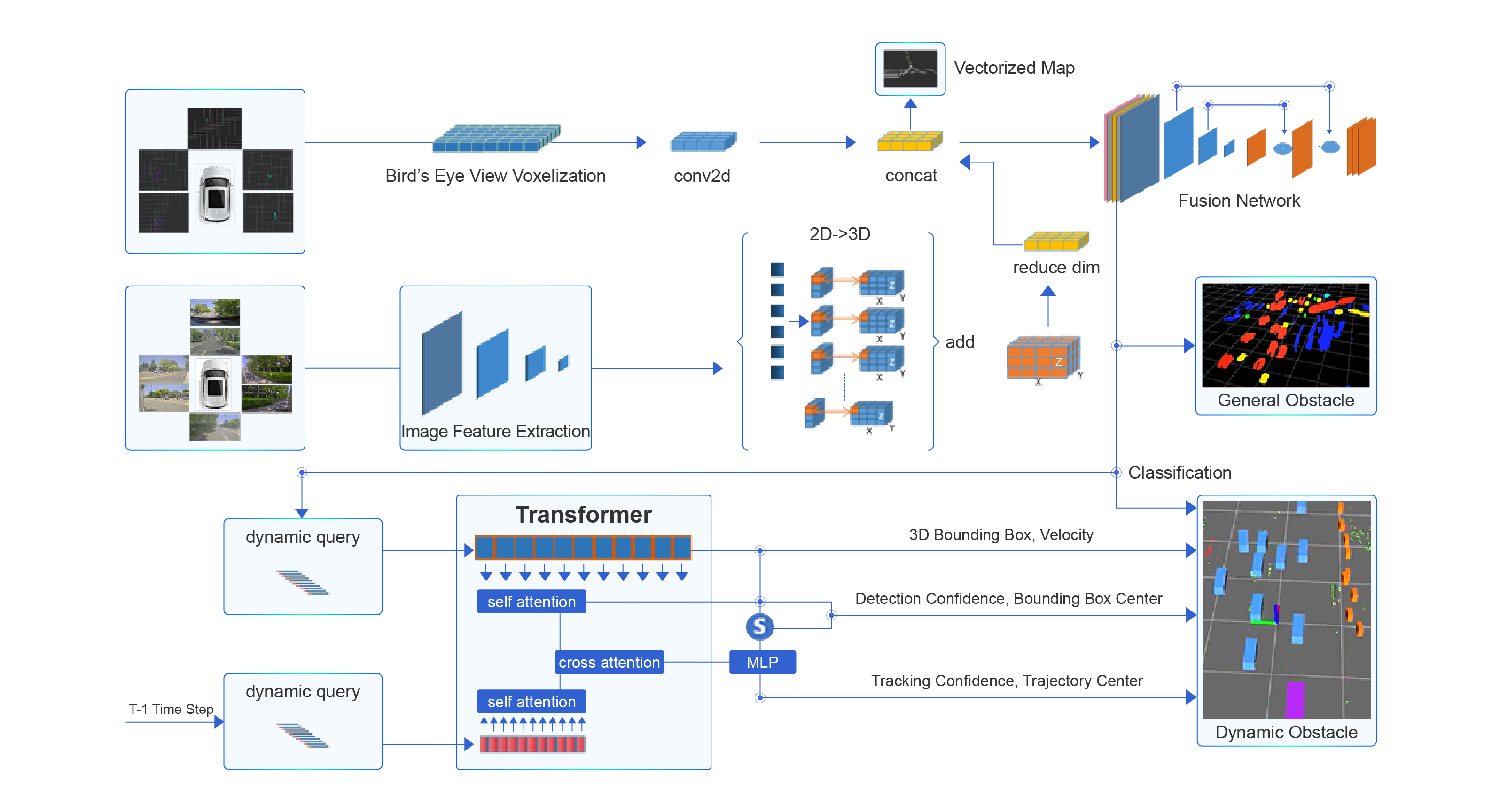

GGPNet: G-PAL Generalized Perception Network

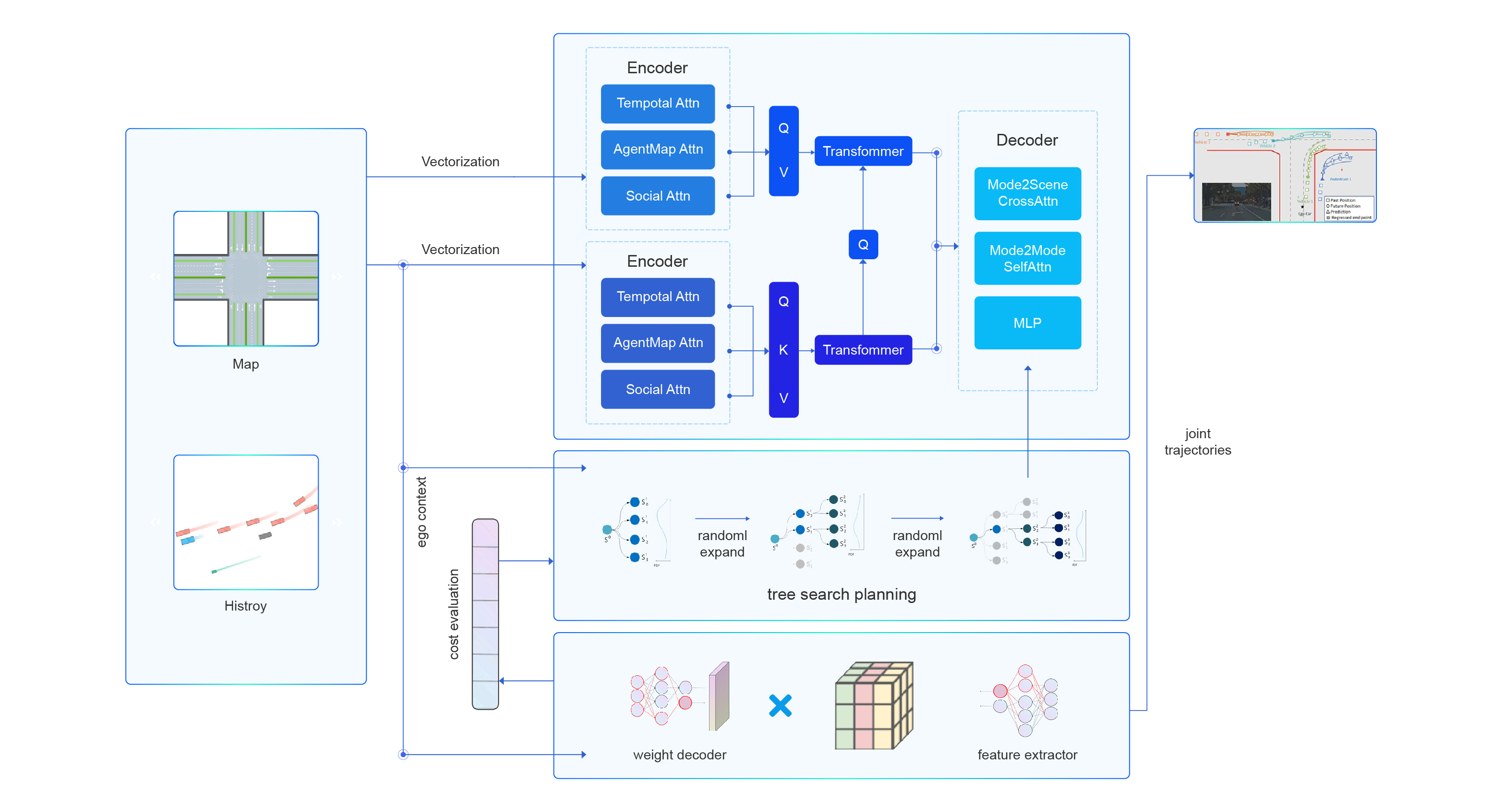

Integration Strategy for a Predictive Decision-Making Network Model and an MCTS (Monte Carlo Tree Search) Decision Tree

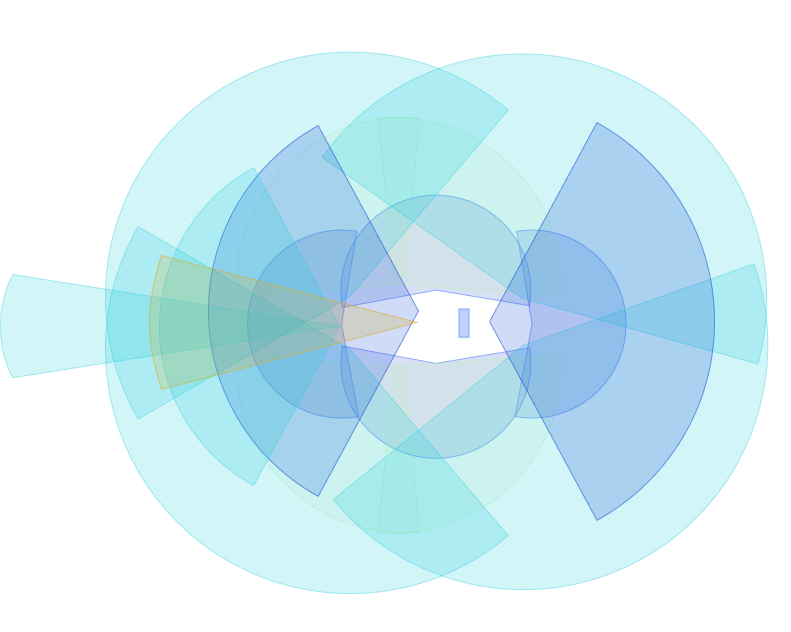

360° "Vision+4D mmWave Imaging Radar" Dynamic/Static Perception & General Obstacle Detection

Highly Robust and Reliable Planning and Control Framework

EkaRT: Cross-Domain Multi-Platform Middleware
